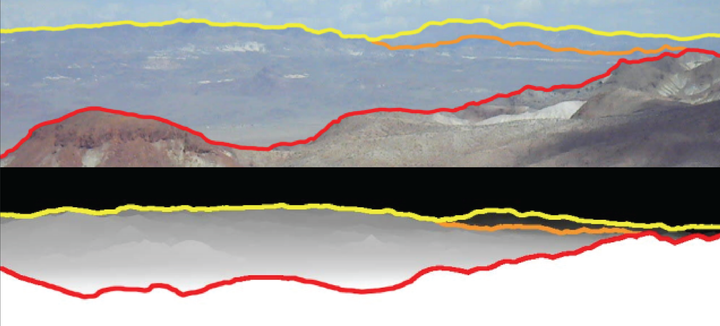

Query skyline (top) and matched synthetic database skyline (bottom)

Abstract

We propose a system for user-aided visual localization of desert imagery that uses no metadata such as GPS readings, camera focal length, or field of view. The system relies only on publicly available digital elevation models (DEMs) to rapidly and accurately locate photographs in non-urban environments such as deserts. It generates synthetic skyline views from a DEM and extracts stable concavity-based features from these skylines to form a database. To localize a query, a user manually traces the skyline on an input photograph. The system automatically refines this traced estimate and extracts the same concavity-based features. We then apply a variety of geometrically constrained matching techniques to efficiently and accurately match the query skyline to a database skyline, thereby localizing the query image. We evaluate our system on a test set of 44 ground-truthed images over a 10,000 km² region of interest in a desert and show that, in many cases, queries can be localized with precision as fine as 100 m².
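To make the notion of a concavity-based skyline feature concrete, the following is a minimal sketch and not the system's actual feature definition, which the abstract does not specify. It assumes a skyline is given as one height per image column (measured upward, so image row indices would need to be flipped first), computes the upper convex hull of that profile, and reports each span between consecutive hull vertices where the skyline dips below the hull chord. The function names `upper_hull` and `concavities`, the `min_depth` threshold, and the width/depth descriptors are all hypothetical choices made for illustration.

```python
def upper_hull(points):
    """Upper convex hull of (x, y) points already sorted by increasing x."""
    hull = []
    for px, py in points:
        # Drop the last hull point while it lies on or below the chord
        # from the second-to-last hull point to the new point.
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            cross = (x2 - x1) * (py - y1) - (y2 - y1) * (px - x1)
            if cross >= 0:  # left turn or collinear: not an upper-hull vertex
                hull.pop()
            else:
                break
        hull.append((px, py))
    return hull


def concavities(heights, min_depth=1.0):
    """Return one descriptor per skyline concavity (assumed feature form).

    heights[i] is the skyline height at column i, larger meaning higher.
    A concavity is the span between two consecutive upper-hull vertices
    whose deepest point lies at least min_depth below the hull chord.
    """
    hull = upper_hull(list(enumerate(heights)))
    features = []
    for (xa, ya), (xb, yb) in zip(hull, hull[1:]):
        if xb - xa < 2:
            continue  # adjacent columns: nothing lies between them
        depth, deepest = 0.0, xa
        for x in range(xa + 1, xb):
            chord = ya + (yb - ya) * (x - xa) / (xb - xa)
            d = chord - heights[x]
            if d > depth:
                depth, deepest = d, x
        if depth >= min_depth:
            features.append({
                "start": xa,
                "end": xb,
                "width": xb - xa,
                "depth": depth,
                "deepest": deepest,
            })
    return features


# Toy skyline: two peaks (columns 1 and 5) with a valley between them.
skyline = [0, 5, 2, 1, 3, 6, 0]
for feature in concavities(skyline):
    print(feature)
```

Under these assumptions, the same routine could be run on both the synthetic DEM skylines and the refined query skyline, so that the two sets of descriptors are directly comparable during matching.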